This is a revised version of the 20100103 post. Tom rightly commented on 2010110 that there was some confusion between data and information, and he warned me that if I ended up talking of insight, I would certainly get it wrong. I am lucky he did not mention knowledge and wisdom… Just to clarify things and avoid using big words, I have adapted this post: what I want to find is “files”, and what the search is based on is “text” contained in the files… where text could be numbers, e.g. years (1739). More generally, “text” is thus any alphanumeric string contained in the file, in the file name, or in the information fields. Some text may be “keywords”, but I shall cover this later.

This being said, how do you find files on your computer? If you have few files and an easy, logical directory structure (mypictures, mymusic, myreports…), this should be a relatively easy task… but when you have many files, say 10000 or a hundred thousand, things get a bit tougher, particularly if you want to locate files both by their names and by their contents.

This post is about software designed to help users retrieve files, such as Google Desktop, Beagle, Tracker, Doodle, Strigi and Pinot. They are not equivalent, which is why they are difficult to compare, and none of them actually offers what I am looking for. As I think I know what I would like to have, I decided to write this article. Maybe some day some people will start an open source project to develop the ideal search engine… Let me know, because I’ll join them!

This post is subdivided into three parts.

- The first is a somewhat detailed inventory of the types of files on my hard disk, as it is useful to know a bit better what you have on your hard disk if you want to optimize searches…

- The second provides a comparison of search tools, in terms of what they look for, how much memory they need, how big their index files can be and particularly what they find!

- The third is my wishlist, i.e. a description of the search engine I would like to have.

Before starting, please note that, although my computer is a Dell Latitude D830 laptop running Ubuntu 9.10, much of the discussion below should be relevant and of interest to people using other operating systems as well!

1. Inventory of my hard disk

1.1. Directory structure

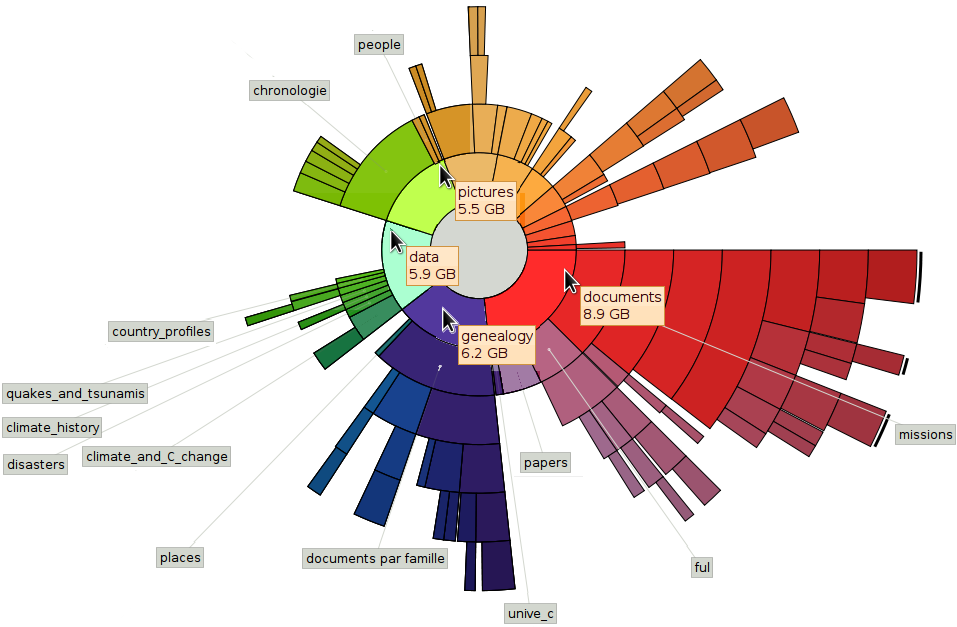

Hard disk structure, with the main directories documents, genealogy, data and pictures; help is the directory to the right of pictures. The figure also shows the depth of the data storage.

I have about 130000 user files and directories, for a total of 40 GB, on my computer. They are all kept in a directory called myfiles which, for security reasons, corresponds to a separate partition on the hard disk. For other files, see endnote (1). Since I shall discuss only myfiles, I will refer to it as “the” disk, with directories, sub-directories, sub-sub-directories etc.

myfiles is subdivided into several directories with the following number of files and sub-directories:

• data, 16000 files in 150 sub-directories. This is where I keep documentation on various subjects I am interested in. For instance, there is a sub-directory 1739-40 with info about the severe winter that occurred during those years, a sub-directory risk with documents on risk management (countries at risk, globalisation of risk), and a sub-directory volcanoes with sub-sub-directories pinatubo, nyiragongo, agung, etc.

• documents, 27000 files and 6 sub-directories; documents contains… documents which I have written (publications, powerpoint presentations…) together with all the supporting material. There is some overlap with folder data, and I may one day reorganise myfiles and merge them. This folder contains the deepest sub-sub-sub… directories (up to 8 levels below documents).

• genealogy, 10000 files in 31 sub-directories, from ardennenschlacht to website.old; genealogy has documentation about my family and related families, together with background about the families, i.e. sources (scanned archives), historical information about the localities where the families lived, gedcom files etc.

• help, 11000 files in three subdirectories called hardware, linux and software

• pictures, 9000 files

• programmes, 7000 files: the programmes I have written over the last 20 years or so

• storage, 41000: mainly linux and windows installation programmes

• website, 4000: the backup of my websites, and other material used on websites

• others, about 5000 files.

1.2. File types

The detailed inventory that follows covers 61419 files in the directories data, documents, genealogy and help only. Based on the extensions (suffixes of the file names), the disk contains 392 file types, plus a type without extension. If you think file extensions and file types are not the same thing, please see endnote (2). Some of the rarest extensions still seem familiar: loc, ltd, lu1, lu2, lu3, m3u, memory, memory~, 2f, rot, sdw, snm, sys, tdm, tpl, uev, url, me~, idx, info~, stat, text, this~, val, var!

The 30 most common extensions are given in the table below.

The table reads as follows. The first column is the rank of each file type when the types are sorted by the disk space they occupy, i.e. their volume (in KBytes of 1024 bytes). The largest volume is occupied by pdf files (3.87 GBytes), which is no surprise. The second column (Count rank) ranks the file types by number of files. For instance, the most numerous files are csv files, followed by html; pdfs are only fifth.
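The volume and count ranks can be approximated with a short script. The sketch below is a minimal Python version. It assumes the extension is simply the last suffix of the file name, so `.tar.gz` counts as `gz`. The function names `extension_of`, `inventory` and `ranks` are hypothetical and not part of any tool mentioned in this post.

```python
import os
from collections import defaultdict


def extension_of(filename):
    """Lower-case suffix without the dot, or '' when there is none.

    os.path.splitext keeps only the last suffix: 'a.tar.gz' -> 'gz'.
    """
    return os.path.splitext(filename)[1].lower().lstrip(".")


def inventory(root):
    """Walk `root` and return {extension: [file_count, total_bytes]}."""
    stats = defaultdict(lambda: [0, 0])
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                size = os.path.getsize(os.path.join(dirpath, name))
            except OSError:  # broken symlink, permission problem, etc.
                continue
            entry = stats[extension_of(name)]
            entry[0] += 1
            entry[1] += size
    return dict(stats)


def ranks(stats):
    """Rank extensions by total volume and by file count (1 = largest)."""
    by_volume = sorted(stats, key=lambda ext: stats[ext][1], reverse=True)
    by_count = sorted(stats, key=lambda ext: stats[ext][0], reverse=True)
    return ({ext: i + 1 for i, ext in enumerate(by_volume)},
            {ext: i + 1 for i, ext in enumerate(by_count)})
```

To use it, run `inventory()` on the directory that holds myfiles (its mount point is not given here), then pass the result to `ranks()`. This gives the equivalent of the volume rank and count rank columns.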

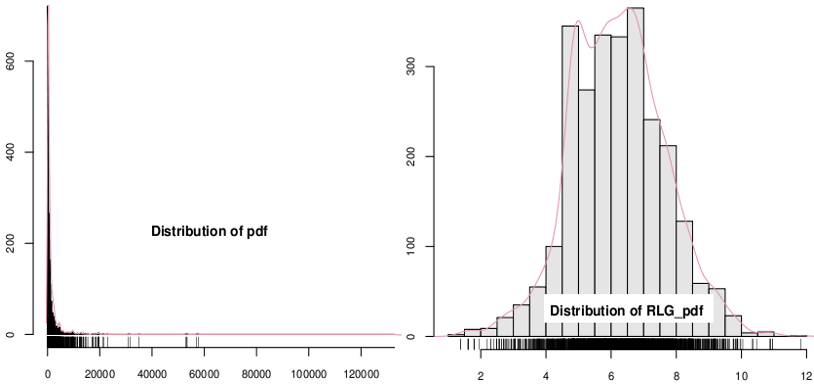

Absolute frequency distribution of the sizes of 2607 pdf files on my computer. Logarithmic scale on the right. Graphs prepared with Rattle 2.5.8.

“Average KB” is the average size of the file type. pdf files on average occupy just under 1.5 MByte (1.486), and they rank only 48th in terms of average size. The files with the largest average size are avi (only 82nd by number of files).

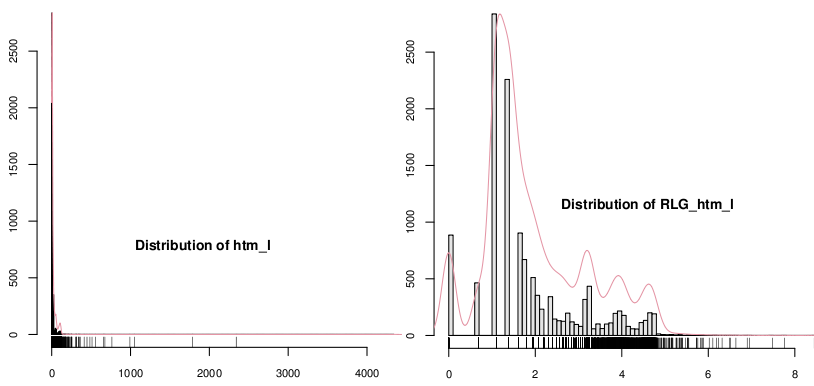

The median size usually markedly differs from the average: it is 2 to 100 times smaller, indicating a positive skew in the size distributions. What this means in practice is that there is a very large number of small files.

The next column (Max KB) is the size of the largest file of each type. For instance, the largest pdf peaks at 137 MBytes, but it ranks only third (see col. Max Rank). The largest file overall is an avi file of 571 MB, a BBC broadcast on the “global warming swindle”. The last column is the 9th decile, i.e. the file size that is exceeded by only 10% of the files. For instance, 90% of jpg images are smaller than 398 KBytes. The fact is that my disk, and possibly many others, is full of small files.
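The mean, median and 9th decile can be checked with Python's standard `statistics` module. This sketch assumes a list of file sizes in KB is already available; `size_summary` is a hypothetical helper. It uses `method="inclusive"`, which treats the list as the whole population and suits an inventory of every file.

```python
import statistics


def size_summary(sizes_kb):
    """Mean, median, 9th decile and maximum of a list of file sizes (KB)."""
    mean = statistics.mean(sizes_kb)
    median = statistics.median(sizes_kb)
    # quantiles(n=10) returns the 9 cut points between deciles; the last one
    # is the 9th decile: 90% of the files are at or below this size.
    ninth_decile = statistics.quantiles(sizes_kb, n=10, method="inclusive")[-1]
    return {
        "mean": mean,
        "median": median,
        "mean_to_median": mean / median,  # well above 1 suggests a positive skew
        "d9": ninth_decile,
        "max": max(sizes_kb),
    }
```

For example, sizes of 1, 2, 3, 4 and 100 KB give a mean of 22 KB but a median of only 3 KB. This is the pattern described above: many small files plus a few very large ones.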

Note that I have included only the files in the directories where I actually look for files. For instance, directory pictures contains about 9000 files, all but a couple of hundred of them jpg or jpeg. This directory is not searched for files.

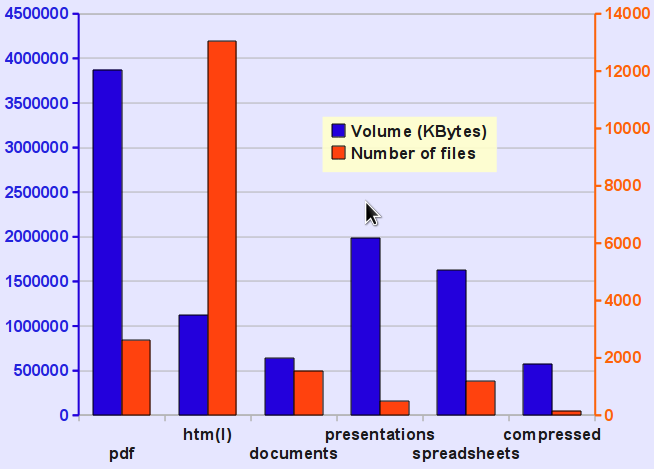

The files I actually want to search for text are essentially documents (1: odt, doc, docx), pdf (2), saved webpages (3: htm, html) and, to some extent, presentations (4: odp, ppt, pptx). More rarely, they are spreadsheets (5: ods, gnumeric, xls, xlsx) or tarballs and compressed files (6: tar, tar.gz, tar.bz, rar, zip). Whatever system I eventually select to find files must be able to search “office” documents, webpages and pdf. The first three types represent 25% of the volume of the files and 27% of their number. All 6 types make up 44% of the volume and 29% of the number of files.

Absolute frequency distribution of the sizes of 13037 htm and html files on my computer. Logarithmic scale on the right. Graphs prepared with Rattle 2.5.8.

The figure shows the volume and the number of the main file types that should be searchable with the search engines compared below.

2. Comparison of search engines

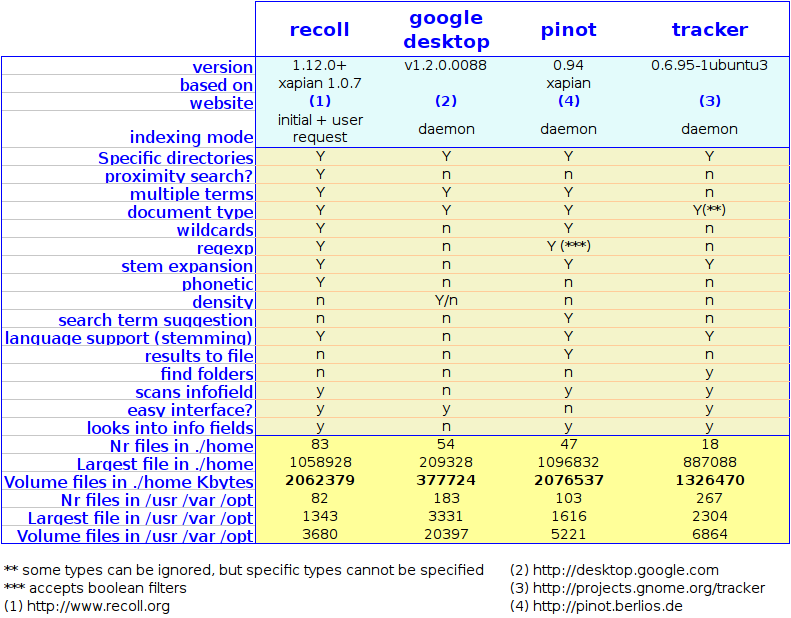

I did a more or less systematic comparison of several search engines over a couple of months, including recoll, tracker, google desktop, pinot, strigi, doodle and namazu. I also used beagle some time ago, but then dropped it in favour of tracker. I never managed to make strigi work correctly, either through the gnome deskbar applet or with catfish, a generic interface for search engines. Same thing with namazu. Doodle is a specialised tool to search the info fields of all kinds of files, but since many of the other search programmes can do that as well, I did not keep doodle on my computer. In the end, I was left with recoll, google desktop, pinot and tracker. The table below provides a general overview of the search engines.

Somewhat irrationally, I eliminated pinot because of its confusing user interface. I say “irrationally” because other people may like the interface, but I don’t understand its logic, and I grew allergic to it. Tracker knows neither wildcards nor regular expressions, and its user interface is ugly and “basic”. Some people may like the simplicity; I don’t. My preference goes to google desktop and to recoll. Google desktop occupies very little disk space, and it puts little strain on system resources (unlike tracker, for instance, which can noticeably slow down my computer). But google desktop is a typical “for dummies” product. For instance, it can only search a limited number of file types (not by chance, they happen to be the most frequent types on my computer, i.e. html, openoffice and MS office, and pdf!). Unfortunately, it does not scan info fields! One of the nice features is that search results can be sorted by “relevance”, but the concept is explained nowhere. It seems to be a mix of the frequency of the searched words and a preference for MS Office files. For instance, a search for “food security” will first list the files where the words occur together, then those where they are separated, then those with food only and security only… I dropped a line to Google to find out how to interpret “relevance”, but they were too busy to answer.
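Google never explained its “relevance” ranking, so the following is only a hypothetical sketch of the ordering observed above. Files containing the exact phrase come first, then files containing all the words separately, then files containing only some of them. Ties are broken by the number of occurrences. It is not Google Desktop's actual algorithm, and `rank` and `tier` are made-up names.

```python
import re


def tokenize(text):
    """Naive tokeniser: lower-case runs of word characters."""
    return re.findall(r"\w+", text.lower())


def tier(tokens, query_words):
    """0 = words adjacent and in order, 1 = all present, 2 = some, None = none."""
    n = len(query_words)
    for i in range(len(tokens) - n + 1):
        if tokens[i:i + n] == query_words:
            return 0
    present = [w for w in query_words if w in tokens]
    if len(present) == n:
        return 1
    return 2 if present else None


def rank(docs, query):
    """docs: {name: text}. Return matching names, best tier first, then by hits."""
    query_words = tokenize(query)
    scored = []
    for name, text in docs.items():
        tokens = tokenize(text)
        t = tier(tokens, query_words)
        if t is None:
            continue  # none of the query words occurs in this document
        hits = sum(tokens.count(w) for w in query_words)
        scored.append((t, -hits, name))
    return [name for _tier, _hits, name in sorted(scored)]
```

The point of the sketch is that each step is explicit, which is exactly what the post asks of a “relevance” indicator.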

My absolute preference goes to recoll, for several reasons:

- it does not use a daemon; instead, the user must update the indices every now and then (the updates are fast). The index can also be updated automatically at regular intervals through cron (insert the line `0 12 * * * recollindex` in your crontab to update the index every day at 12:00) or by compiling recoll with the --with-fam or --with-inotify options;

- search capability is the most flexible of the four engines;

- it can do “proximity searches”, i.e. it can find “food security”, i.e. “security” immediately following “food”, or look for “food” and “security” separated by a certain number of words.
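A proximity search can be sketched in a few lines: tokenise the text, record the positions of each word, and count the words between them. This is a minimal illustration that assumes a naive `\w+` tokeniser and works on raw text. A real engine such as recoll stores word positions in its index instead. `near` is a hypothetical function name.

```python
import re


def positions(tokens, word):
    """Indices at which `word` occurs in the token list."""
    return [i for i, t in enumerate(tokens) if t == word]


def near(text, first, second, max_gap=0, ordered=True):
    """True if `second` occurs within `max_gap` words of `first`.

    max_gap=0 means the words are adjacent (a phrase search).
    With ordered=True, `second` must come after `first`.
    """
    tokens = re.findall(r"\w+", text.lower())
    for a in positions(tokens, first.lower()):
        for b in positions(tokens, second.lower()):
            gap = b - a - 1 if ordered else abs(b - a) - 1
            if 0 <= gap <= max_gap:
                return True
    return False
```

With `max_gap=0` and `ordered=True`, this is the “food security” phrase case. Raising `max_gap` gives the “separated by a certain number of words” case.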

I am now going to uninstall all the other search engines from my computer. I am sure I will notice a jump in performance!

3. Terms of Reference (TOR) for an ideal search engine

Recoll is still far from my “ideal search engine” (ISE). Here is a list of things that I would like the ISE to be able to do…

- make a distinction between “index words” (IW) – those identified in the text body and kept in the huge index files mentioned in the table above (> 2 GBytes) – and “keywords” (KW) that are assigned by the user and usually stored in the info fields;

- for both KW and IW, understand synonyms (war=conflict, capacitación=training, conflict=krieg, krieg=guerre, etc.);

- recognise KW and IW hierarchies (e.g. Africa = North Africa, West Africa, East Africa, etc.; North Africa = Algeria, Tunisia, Morocco, etc.);

- record authors (through a special KW) and offer the option to restrict all analyses to specific authors;

- understand regular expressions;

- assign densities to IWs (i.e. know how many times “buckwheat” occurs in a document, and use this to define a “relevance” indicator);

- recognise the structure of KWs and IWs, e.g. tell the user that “war” is correlated with “conflict” and “climate” with “atmosphere” and “weather”, or see that “insecurity” comes as “food insecurity”, “environmental insecurity” and “job insecurity”;

- (optionally) assign KWs to documents automatically, based on the most frequent IWs in each document;

- help the user to structure directories rationally (maybe by building a parallel “virtual directory structure”);

- provide a GUI that lets users manage KWs and IWs (add some that do not exist in documents, delete others, use a “stop list”, i.e. a list of words to be ignored). The GUI should also be able to analyse the KWs and IWs and show correlations, densities, numbers of documents etc.
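Several of the wishes above (synonyms, hierarchies, a stop list, densities) can be sketched with plain dictionaries. The tables `SYNONYMS`, `HIERARCHY` and `STOP_LIST` below are hypothetical examples taken from the list. They are not data from any existing tool. `expand` turns one search term into the set of terms to look for, and `densities` counts occurrences per 1000 words after removing stop-list words.

```python
import re
from collections import Counter

# Hypothetical user-maintained tables, in the spirit of the wishlist.
SYNONYMS = [
    {"war", "conflict", "krieg", "guerre"},
    {"training", "capacitación"},
]
HIERARCHY = {  # broader term -> narrower terms (an "any" list)
    "africa": ["north africa", "west africa", "east africa"],
    "north africa": ["algeria", "tunisia", "morocco"],
}
STOP_LIST = {"the", "and", "of", "a", "in"}


def expand(term):
    """The term, its synonyms and, recursively, all narrower terms."""
    result, todo = set(), [term.lower()]
    while todo:
        t = todo.pop()
        if t in result:
            continue  # already expanded; avoids loops between synonyms
        result.add(t)
        for group in SYNONYMS:
            if t in group:
                todo.extend(group)
        todo.extend(HIERARCHY.get(t, []))
    return result


def densities(text):
    """Occurrences of each non-stop-list word per 1000 words of the text."""
    words = [w for w in re.findall(r"\w+", text.lower()) if w not in STOP_LIST]
    counts = Counter(words)
    total = len(words) or 1  # avoid division by zero on empty text
    return {w: 1000 * c / total for w, c in counts.items()}
```

Multi-word entries such as “north africa” would still have to be matched with a phrase search rather than word by word.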

Endnotes

(1) I am user ergosum. In addition to the myfiles directory, I have the standard /home/ergosum directory with the configuration files for my programmes (10 GB, about 10000 files). The system files (all the files outside the /home directory, including my programmes and the linux/ubuntu system) amount to 370000 files (6 GB).

(2) It is difficult to say how many actual types this corresponds to, as linux programmes (except some of them with a windows ancestry or bias) do not recognise file types based on the suffix, but based on contents and permissions. In addition, several types are equivalent (jpg, jpeg; htm, html), and others are identical in coding but their names differ (bna, bnb; ida, img, af, dvi…). Therefore, “file types” and suffixes are indeed not the same thing, but for the purpose of this little inventory, we will assume they are!

I think there is a fundamental misunderstanding in this post between “information” and “data”. As the post title says, you’re looking for information, which is the result of an interpretation of the data.

Such interpretation is always subjective, and no basic search engine (one that searches for data) can provide it. Furthermore, if such a tool provided an interpretation of the data predefined by a developer, it would most probably not match yours, so the user should be in control of the interpretation. Your ideal tool should therefore be made of two components:

1) An interpretation layer that would allow you to link your data with information (keywords), for instance IMG_5476.jpg

a) Is a picture (provided you can rely on the file extension, if not you have to come up with a more complex rule to identify a picture based on the file content)

b) Was taken on the 25th of December (based on the EXIF data of the jpeg and not on the date of the file)

c) Is most likely related to Christmas (but not certain)

d) Represents Perrine, Charlotte and Clotilde (can be possibly determined with a face recognition engine)

2) A search layer on information using the above keywords.

Assuming such a tool existed, you would face additional challenges:

– Uncertainty. The rule 25th of December –> Christmas keyword would be true in only a certain percentage of cases. The search engine should take such a probability into account when returning the results of a search. The other approach would be to define a search by keywords and a minimum probability.

– Relationship between keywords. Tanzania –> Africa is straightforward, so a search on Africa should return the files containing Tanzania. What about “rain” –> “climate”? The song “A rainy night in Georgia” would appear in the results of a search on climate change (notice that the exact rule should be rainy –> rain –> climate).

– Cost of maintenance. I’m certain that maintaining and fine-tuning the rules would be extremely time-consuming, and it is unclear what the overall benefits would be. The question is then the balance between the accuracy of the search results and the cost of maintaining the rules.

– Finally, there is the implementation. You mentioned that beagle was consuming too much CPU for indexing. What do you think such an interpretation layer would require in terms of resource usage?
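The “minimum probability” idea and the rainy –> rain –> climate chain can be sketched as rules that carry a probability. This is a hypothetical illustration. The rules and their probabilities are invented for the example, and multiplying probabilities along a chain assumes the rules are independent, which is a simplification.

```python
# Hypothetical rules: (from keyword, to keyword, probability the rule holds).
RULES = [
    ("rainy", "rain", 0.95),
    ("rain", "climate", 0.6),
    ("tanzania", "africa", 1.0),
    ("25 december", "christmas", 0.7),
]


def infer(keywords):
    """Propagate RULES from a file's own keywords (probability 1.0 each).

    A derived keyword gets the product of the probabilities along a chain,
    keeping the highest value when several chains reach it.
    """
    best = {k: 1.0 for k in keywords}
    changed = True
    while changed:  # repeat until no keyword's probability improves
        changed = False
        for src, dst, p in RULES:
            if src in best and best[src] * p > best.get(dst, 0.0):
                best[dst] = best[src] * p
                changed = True
    return best


def search(files, keyword, min_probability=0.5):
    """files: {name: [keywords]}. Return (name, probability), best first."""
    hits = []
    for name, kws in files.items():
        p = infer(kws).get(keyword, 0.0)
        if p >= min_probability:
            hits.append((name, p))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)
```

With these made-up numbers, the rainy song reaches “climate” with a probability of about 0.57. It is returned with a threshold of 0.5 and dropped with a threshold of 0.6, which is the trade-off described in the comment.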

In information management theory, once you have the information, the next step is insight 🙂

OK, Tom, thanks… I buy the fact that I look for information, and in order to get it, I search the text (data) that makes up my files.

I am not interested in anything as sophisticated as what you describe. For instance, I do not want to search for pictures (nothing beyond the name of the picture), but I want to index text (only office-type documents: doc, ppt, odt etc., pdf and html). For the time being, I do not want to go into details before I have compared the performance of strigi, doodle, tracker etc… but I have maintained and regularly revised the Terms of Reference of what I would like to have. There will be a list of synonyms, a stop list (ignored terms) and a list of “any” terms, just as in CDS ISIS (e.g. any Africa is Angola, Algeria, Benin, Botswana…). I have used CDS ISIS for years, and I agree that some fine-tuning is required initially, but I assume I am not the only one in the world who wants to search “any climate_variable” in “any Africa”. I can also take the any-lists from CDS ISIS and recycle them! Just for fun, I searched “security” with tracker, and I found 2708 documents. Hopeless. Why can’t the thing tell me:

Dear Ergosum, pls. do select one of

– Environmental security

– Food security

– Regional security

– Security risk

– Security policy
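One simple way to produce such a list is to count the words that occur immediately before or after the search term in the documents. The sketch below assumes the document texts are available as strings; `refinements` is a hypothetical name. In practice a stop list would be needed to drop pairs such as “and security”. This approach also only finds two-word phrases, so it would not suggest “Asexual”.

```python
import re
from collections import Counter


def refinements(docs, term, top=5):
    """Suggest two-word phrases containing `term`, most frequent first."""
    term = term.lower()
    counts = Counter()
    for text in docs:
        tokens = re.findall(r"\w+", text.lower())
        for i, tok in enumerate(tokens):
            if tok != term:
                continue
            if i > 0:  # the word just before: "food security"
                counts[f"{tokens[i - 1]} {term}"] += 1
            if i + 1 < len(tokens):  # the word just after: "security policy"
                counts[f"{term} {tokens[i + 1]}"] += 1
    return [phrase for phrase, _n in counts.most_common(top)]
```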

I searched “buckwheat”, and I got 2583. For “Neanderthal”, I found 123. That’s manageable. For “sex” I found only 1232, but here too I would like to be offered the choice between

– Sex workers

– Sex ratio

– Sex bias

– Asexual

– Sexual behaviour

Tom, I realise this is not easy, but let me try to describe what I want… after which, I will no doubt develop some insight too!

OK, I admit my example was over the top, but my intention was mainly to highlight that interpretation is the key point, that it is subjective, and that it is more than just text search. (Even geographical relationships could lead to interpretation in some cases: http://www.lalibre.be/actu/belgique/article/550964/quand-la-flandre-raye-la-wallonie.html ).

Googling around, I came across this link http://www.semantic-knowledge.com/ . It looks closer to what you need and is based on semantic networks, but it is Windows-only and commercial.

Dear René,

You know that I am intuitive. When I lose a scientific paper that I downloaded a few months earlier, I prefer to look for it again on google instead of trying to find it on my computer, and in general I find it faster! Why don’t you start from this reality? What I am suggesting is that you try to develop such a tool in situ. You certainly know that google preferentially finds the information most wanted by people around the world (democratic ranking). Unfortunately, you are the only one looking inside your computer. So how can the google rule be reproduced? Maybe with software that simulates a “memory” of the computer, recording the keywords you type most often on your keyboard? What do you think about this idea?

I think there are still some cases when you want to look for files in your own computer:

1. find your own work files that were edited long ago, when you have no idea where to find them

2. find a reference that contains all your notes and comments; these are not available from google

3. recall other information about the files, e.g. the date, the editor, the revision date, etc.

maybe others…

Needless to say, I very much agree with your comment. I currently have 19315 files in my “data” directory, where I store basically “documents” on various subjects, in a directory structure that makes sense to me. For instance, folder /climate_and_climate_change/security/mediterranean has 3 files and /hominids/lactase_milk_vitamin-d/gene-culture-coevolution/ has 7. In addition I tend to keep documents used for various papers together with the papers, spreadsheets that were used in the preparation of the paper, presentations I delivered on the subject etc. Same thing for blogs. Between the papers, collected “data”, lectures, genealogy etc I have 277543 documents. Recoll finds them, and does not impose on me whatever Google thinks is good for me! And after I have located that file I was looking for… I have the full info!

See this link for a discussion (in French) about the way we are being treated by Google!

I sent my message a little too quickly. This kind of memory software would have to be trained. It would take some time to “understand” your expectations. If you enter “Angola”, it would learn that, besides the name of the country, you often wrote the words “climate” and “security”, for example. Would that be satisfying?

Hello Riad,

if you read this post again (the newest version of 20100131), you will see that recoll comes close to what I am looking for… and this is the confirmation that I am not the only funny guy who wants to locate files on his computer. Try it yourself!

About your idea to have a programme scan what I type on the keyboard, this is indeed a good idea, but I think it can be implemented more easily by labelling some files as having been authored by me, for instance through a keyword or by including the author among the properties. I have added the point to my terms of reference above!

Here are a few comments about Copernic, a Windows programme that works more or less like RECOLL. Once the files have been indexed, indexing proceeds incrementally and unobtrusively. Another advantage: searches are simple, the programme accepts AND, OR, NEAR and parentheses, and the results can then be filtered by file type, date etc. I know this comment sounds like an ad for Dash (thanks, Dash!), but that is how I see this software. The full version, which you need if you have more than 75000 files, costs $68 (VAT included).

I said that RECOLL is “more or less equivalent” to COPERNIC. It is more flexible for indexing but more complicated for searching (also more flexible, of course, but that is not useful), and the results cannot be filtered. Among the others, the tools provided by Windows are pathetic. Google Desktop, which is mentioned in this post, is not bad, but like almost everything from Google, it was never updated. That is the Google technique: launch a product; if its success is global and immediate and growth continues, invest; otherwise, abandon it. Have a look at its Wikipedia page, which was obviously prepared by Google. I would also add that Google Desktop had a linux version! Of the 13 programmes in this list, 13 are for windows, 9 for linux and 4 for Mac.

Google Desktop had other characteristics typical of the company. It treated users as imbeciles by making decisions for them, for instance by sorting search results by “relevance”… without ever explaining how that concept is defined. I tried to get some information, but never received an answer. Google Desktop had another huge flaw. You could choose where to search: the hard disk and/or the web and/or e-mail, if I remember correctly. But even if you “de-selected” the web, Google Desktop kept trying and retrying to connect to it (like the proverbial work put back on the loom time and again!)… It was this feature that finally exhausted my patience!

Of course, Copernic is not without faults. For instance, indexing cannot be stopped or run only on demand. Another flaw: a search for “information” in quotes also finds “désinformation”!

I ran into problems with Copernic around Easter, which they took a very long time to fix. I needed a working search engine and tried some other programmes, eventually settling on dtSearch. For professional use and for searching mostly text files, the programme is better than Copernic. Here are some of the reasons:

* possibility to use synonyms (search “war” and find “guerra” and “conflict”; search for Africa and find “Algeria”, “Angola”, “Benin”…)

* control over stopwords (which results in much smaller and more efficient indices); there’s also the option to exclude numbers, which is very useful as I have many data files.

* finds folders (Copernic doesn’t)

* less exotic interface, but I agree that’s very subjective

* option to search files by specifying which extensions to include OR to exclude (Copernic has only the second)

* several separate indices, which can be updated separately too

* better search options (proximity search)

* visualisation of keywords

* significantly faster updating of indices